The Logic of Sensor Fusion (And Why Your SIEM Needs a Trust Score)

In high-stakes environments like skydiving, trusting a single data source is risky. If your altimeter drifts and you don't notice, you lose altitude awareness. In a SOC, the stakes are similar: if an attacker blinds a sensor or tampers with logs, single-source alert logic fails silently.

Most detection rules evaluate one signal at a time: a login event, a process execution, or a network connection. But attackers don't operate in single events; they operate in chains. In this project, I built a Python-based Trust Engine that uses sensor fusion to calculate real-time confidence scores across multiple telemetry sources. The objective is to detect not just what happened, but how reliable the data reporting it actually is.

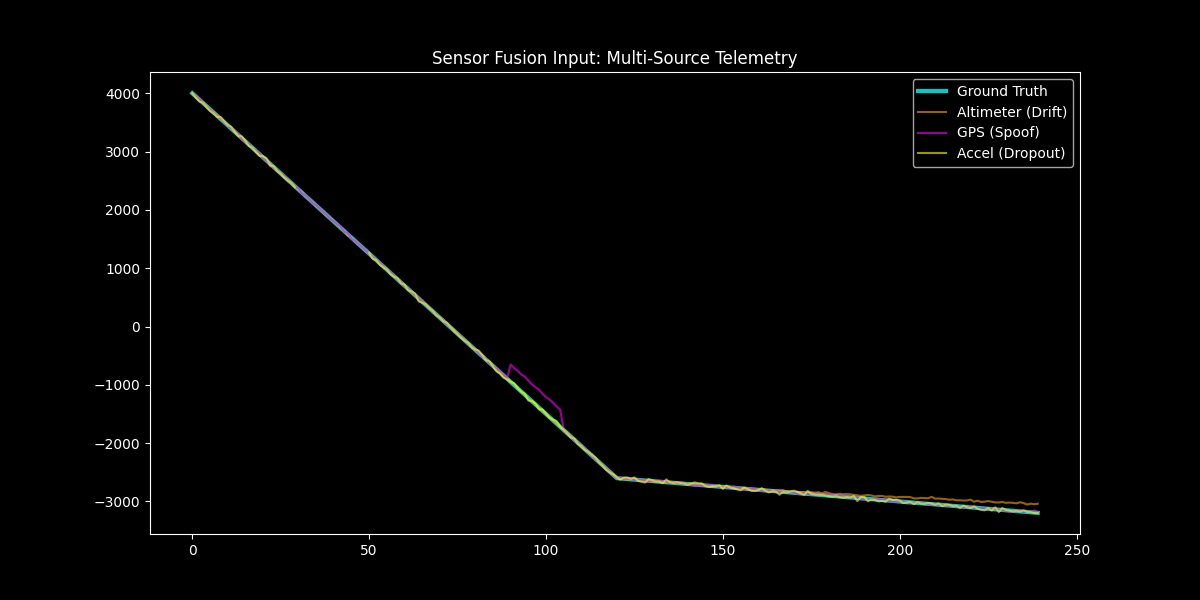

1: The Skydive Scenario (Simulating Multi-Source Telemetry)

I started by generating a realistic dataset representing a skydive with three telemetry streams:

- Altimeter: Primary altitude sensor.

- GPS: Secondary altitude verification.

- Accelerometer: Motion/velocity validation.

Then I injected three failure modes that mirror SOC blind spots: Slow Drift (Consistency), Sudden Spoofing (Integrity), and Signal Dropout (Availability).

Each of these happens in real SOC environments. The question is: can your detection system recognize when its own data sources are unreliable? The graph below shows what "telemetry under attack" actually looks like:

Artifact 1: Sensors vs Ground Truth Graph

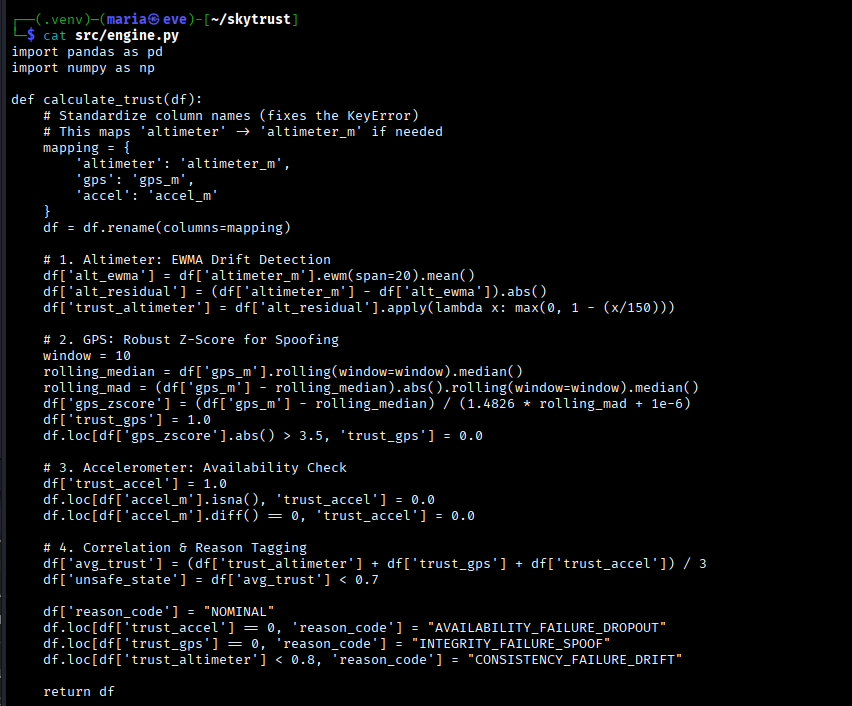
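A dataset like the one described above can be approximated with a few lines of standard-library Python. This is a minimal sketch, not the original generator: the function name `simulate_jump`, the descent rate, the noise levels, the fault windows, and the choice of which sensor drops out are all illustrative assumptions.

```python
import random

def simulate_jump(n=600, seed=0):
    """Return ground truth plus three sensor streams with injected faults.

    All magnitudes and fault windows are hypothetical values for illustration.
    """
    rng = random.Random(seed)
    truth = [4000.0 - 5.0 * i for i in range(n)]             # steady 5 m/tick descent

    altimeter = [h + rng.gauss(0.0, 2.0) for h in truth]     # primary altitude
    gps = [h + rng.gauss(0.0, 5.0) for h in truth]           # secondary altitude
    vspeed = [-5.0 + rng.gauss(0.0, 0.2) for _ in range(n)]  # accelerometer-derived motion

    # Slow drift (consistency): altimeter error grows 0.5 m per tick from tick 200.
    for i in range(200, n):
        altimeter[i] += 0.5 * (i - 200)
    # Sudden spoofing (integrity): GPS reads 800 m too high for 50 ticks.
    for i in range(350, 400):
        gps[i] += 800.0
    # Signal dropout (availability): no accelerometer data for 60 ticks.
    for i in range(450, 510):
        vspeed[i] = None

    return truth, altimeter, gps, vspeed
```

Representing dropout as `None` rather than zero keeps "no data" distinguishable from "a reading of zero", which matters once a trust score has to treat silence as its own signal.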

2: Implementing Trust Scoring (EWMA & Robust Z-Scores)

A common problem in both sensors and user behavior is Slow Drift: gradual deviation that never crosses a fixed alert threshold. Standard detections miss this because they evaluate single data points, not trends over time. To solve this, I implemented an Exponentially Weighted Moving Average (EWMA) to create a moving baseline. When the altimeter wandered, the engine calculated the residual against that baseline and dropped the trust score.
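One way the EWMA step could look is sketched below. It is an assumption-laden illustration rather than the engine's actual code: the name `ewma_trust`, the smoothing factor `alpha`, the `tolerance` scale, and the exponential mapping from residual to trust are all hypothetical choices.

```python
import math

def ewma_trust(readings, alpha=0.05, tolerance=10.0):
    """Score each reading in (0, 1] by its residual from an EWMA baseline.

    alpha and tolerance are hypothetical tuning values, not the author's.
    """
    baseline = readings[0]
    scores = []
    for x in readings:
        residual = abs(x - baseline)                   # distance from the moving baseline
        scores.append(math.exp(-residual / tolerance)) # 1.0 at zero residual, decays with drift
        baseline += alpha * (x - baseline)             # standard EWMA update
    return scores

# A flat signal that starts drifting upward by 0.5 units per tick.
readings = [100.0] * 50 + [100.0 + 0.5 * k for k in range(1, 101)]
scores = ewma_trust(readings)
```

With a small `alpha`, the baseline lags a steady drift, so the residual settles near `slope / alpha` and the trust score stays depressed for as long as the drift continues. A large `alpha` would let the baseline chase the drift and hide it.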

To catch Spoofing, I used Robust Z-Scores based on Median Absolute Deviation (MAD). Unlike the mean and standard deviation behind a classic Z-score, the median and MAD are barely affected by the outliers an attack introduces. When the GPS signal jumped, the Z-score spiked, and the engine instantly flagged the data as untrustworthy.
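A robust Z-score of this kind can be sketched as follows. The function name `robust_z` and the sample window are hypothetical; the 0.6745 scaling constant and the 3.5 cutoff are the commonly used values for the modified Z-score, assumed here rather than taken from the engine.

```python
import statistics

def robust_z(window, value):
    """Modified Z-score of `value` against a window of recent readings."""
    med = statistics.median(window)
    mad = statistics.median([abs(x - med) for x in window])
    if mad == 0:
        # Degenerate window: every reading equals the median.
        return 0.0 if value == med else float("inf")
    # 0.6745 rescales MAD so the score is comparable to a standard Z-score
    # when the underlying data is roughly normal.
    return 0.6745 * (value - med) / mad

recent_gps = [1000, 1002, 998, 1001, 999, 1003, 997]   # hypothetical readings
normal = robust_z(recent_gps, 1001)                     # small score
spoofed = robust_z(recent_gps, 1800)                    # far above a 3.5 cutoff
```

Because the median and MAD come from the recent window rather than from the suspicious reading itself, a single spoofed jump cannot inflate the scale it is measured against.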

Here's the core logic for the trust engine calculation:

Code Snippet: EWMA and Robust Z-Score Logic

Artifact 2: Trust Score Degradation Graph

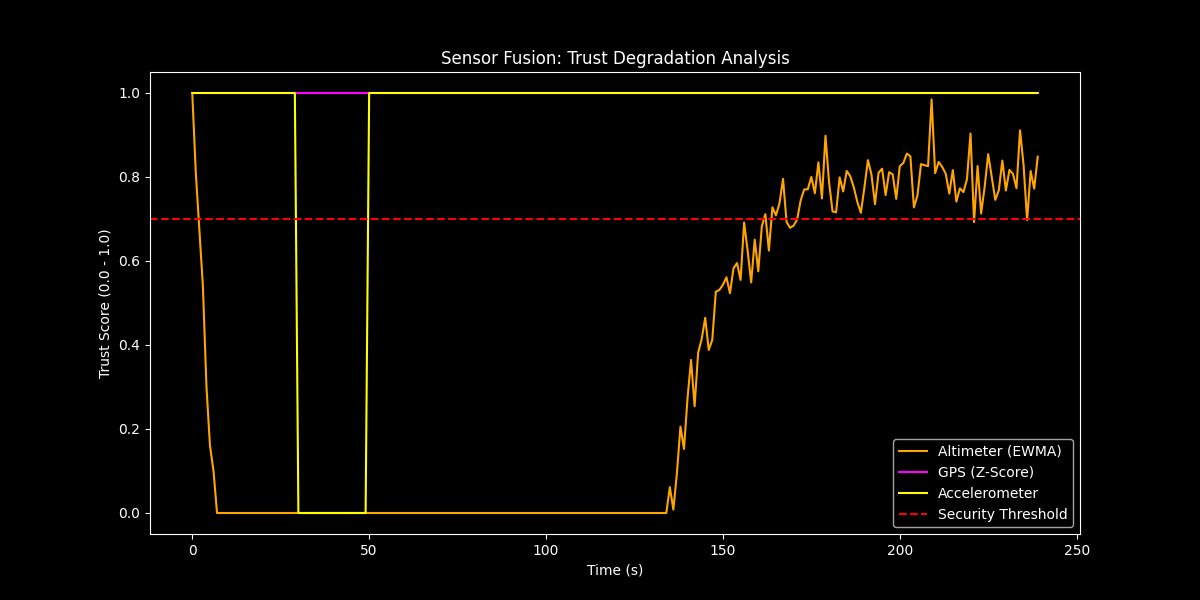

3: Weighted Correlation (The Verdict)

I built a correlation layer that averages trust scores across all three sensors and set a security threshold at 0.7. If the combined trust falls below this, the system triggers an UNSAFE STATE.

One anomaly is a fluke. Three simultaneous anomalies across Identity, Endpoint, and Network? That's an intrusion chain.
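The correlation layer described above can be sketched as a weighted average with a cutoff. The 0.7 threshold and the SECURE/UNSAFE states come from the project; the function name `fuse_trust`, the optional `weights` argument, and the sample scores are assumptions for illustration.

```python
def fuse_trust(scores, weights=None, threshold=0.7):
    """Combine per-sensor trust scores into one verdict.

    scores:  mapping of sensor name -> trust in [0, 1]
    weights: optional mapping of sensor name -> weight (equal weights if omitted)
    """
    if weights is None:
        weights = {name: 1.0 for name in scores}
    total = sum(weights[name] for name in scores)
    combined = sum(scores[name] * weights[name] for name in scores) / total
    state = "SECURE" if combined >= threshold else "UNSAFE"
    return combined, state

# One degraded sensor alone does not trip the threshold...
single = fuse_trust({"altimeter": 1.0, "gps": 1.0, "accelerometer": 0.3})
# ...but simultaneous degradation across sensors does.
chain = fuse_trust({"altimeter": 0.4, "gps": 0.2, "accelerometer": 0.9})
```

With equal weights, a single low score is diluted by two healthy ones, which is the "one anomaly is a fluke" behavior. Raising the weight of a sensor makes the verdict more sensitive to that source.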

Terminal Screenshot: The transition from SECURE to UNSAFE

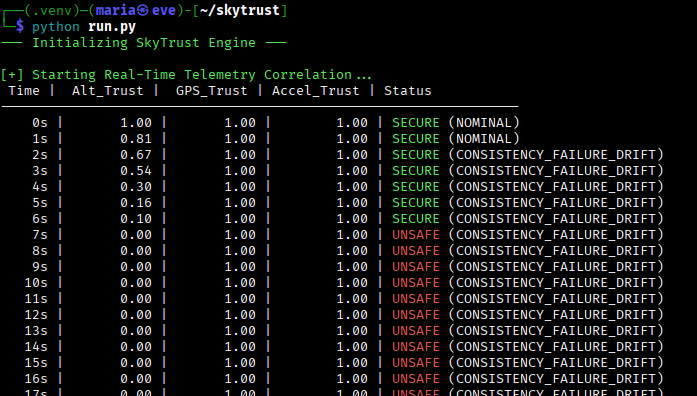

Analysis Complete: Final engine verdict and termination status

Conclusion: In a hostile environment, trust is a variable, not a constant.

Building this engine reinforced a core principle: you cannot trust a single sensor in a hostile environment. Attackers don't just exploit vulnerabilities; they blind the systems designed to detect them. Sensor fusion isn't just for aerospace; it's how mature SOCs evaluate the truth.

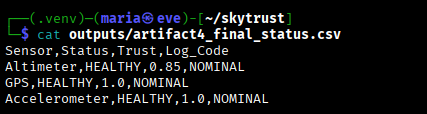

The final mission report shows the structured incident logging generated by the engine:

Final Status Table: Structured output for SIEM ingestion
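Structured output of this kind is often emitted as one JSON object per verdict. The sketch below is only an assumed shape: the function name `build_event` and every field name are hypothetical, not the engine's actual schema.

```python
import json
from datetime import datetime, timezone

def build_event(scores, combined, state, threshold=0.7):
    """Serialize one engine verdict as a JSON line (field names are hypothetical)."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "state": state,                                   # "SECURE" or "UNSAFE"
        "combined_trust": round(combined, 3),
        "threshold": threshold,
        "sensor_trust": {name: round(s, 3) for name, s in scores.items()},
    }
    return json.dumps(event, sort_keys=True)

line = build_event({"altimeter": 0.4, "gps": 0.2, "accelerometer": 0.9}, 0.5, "UNSAFE")
```

Keeping the per-sensor scores next to the combined verdict lets an analyst see which source dragged the trust down without re-running the engine.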

See the quick summary on Instagram @maria.cybersec.